Mistral Medium 3 offers state-of-the-art coding, reasoning, and multimodal capabilities at around one-eighth the cost of top competitors.

Introduction

Mistral Medium 3 is a commercial multimodal language model released in May 2025. It accepts text and image input, supports a 128k-token context window, and is aimed at professional workloads such as coding, data analysis, and document understanding. It can be self-hosted on as few as four GPUs in the cloud or on-premises, and is available through Mistral's API and platforms including Amazon SageMaker, Azure AI Foundry, IBM watsonx.ai, and NVIDIA NIM.

Enterprise‑Grade

Cost‑Efficient

Multimodal

On-Premises Friendly

Review

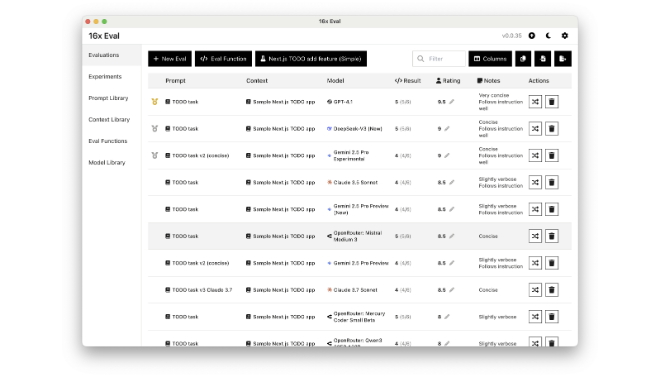

Enterprise-grade, with support for hybrid and on-premises deployment, Mistral Medium 3 balances performance, price, and flexibility. Reviewers describe its accuracy as "frontier-class" at a sharply reduced cost, with quality comparable to premium models such as Claude Sonnet 3.7 and ahead of several open models.

Features

Frontier‑Class Performance

Performs at or above 90% of Claude Sonnet 3.7 on coding and STEM benchmarks, and outperforms models such as Llama 4 Maverick and Cohere Command A.

Multimodal & Large Context

Accepts text and image input and handles documents of up to 128k tokens (roughly 192 A4 pages).
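The ~192-page figure can be reproduced with common rules of thumb. A rough sketch, assuming 128k means 128,000 tokens, about 0.75 English words per token, and about 500 words per A4 page (heuristics, not measurements of Mistral's tokenizer); fits_in_context and its reserved_output_tokens parameter are hypothetical helpers:

```python
# Rough context-window arithmetic for Mistral Medium 3.
# Assumptions (heuristics, not tokenizer-exact): 128k = 128,000 tokens,
# ~0.75 English words per token, ~500 words per A4 page.
CONTEXT_TOKENS = 128_000
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def pages_that_fit(context_tokens: int = CONTEXT_TOKENS) -> float:
    """Approximate number of A4 pages that fit in the context window."""
    return context_tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


def fits_in_context(word_count: int, reserved_output_tokens: int = 4_000) -> bool:
    """Estimate whether a document of `word_count` words fits, leaving
    `reserved_output_tokens` of the window free for the model's answer."""
    estimated_tokens = word_count / WORDS_PER_TOKEN
    return estimated_tokens + reserved_output_tokens <= CONTEXT_TOKENS


print(pages_that_fit())           # 192.0
print(fits_in_context(80_000))    # True: ~106,667 tokens + 4,000 reserved
print(fits_in_context(95_000))    # False: ~126,667 tokens + 4,000 reserved
```

Real token counts depend on the tokenizer, language, and formatting (code and tables usually cost more tokens per word), so treat the result as an estimate.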

Enterprise Deployment Flexibility

Deploy in a hybrid cloud or fully on-premises on as few as four GPUs, keeping data in-house and latency low.

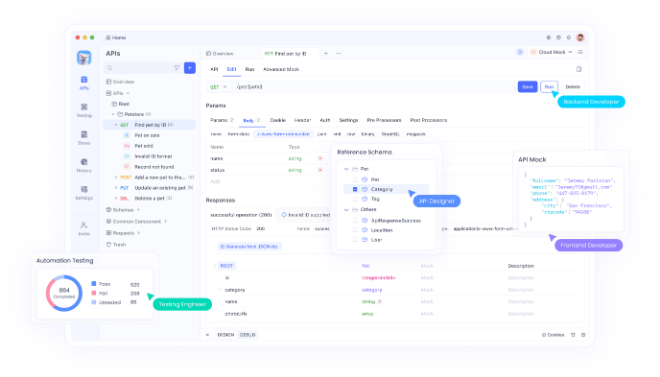

API & Ecosystem Integrations

Accessible via Mistral's own platform, Amazon SageMaker, Azure AI Foundry, IBM watsonx.ai, NVIDIA NIM, and Google Vertex AI.

Fine‑Tuning & Post‑Training

Enterprise customers can post-train or fine-tune the model on internal data to fit their workflows.

Best Suited For

Software Engineers

Rapid code generation, function calling, and in-IDE debugging.

Data Analysts & Researchers

Ingestion of long documents and multimodal data for summarization and extraction.

Enterprise IT & Compliance Teams

On-premises deployment keeps sensitive data under the organization's control, supporting regulatory compliance.

AI‑Driven Enterprises

Banking, healthcare, and energy firms building bespoke AI agents and automations.
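For the function-calling use case, the model is offered tool definitions and replies with the tool it wants to call. A minimal sketch, assuming Mistral's chat-completions tools format (type "function" with a JSON Schema parameters object, tool_choice "auto") and a reply in which choices[0].message.tool_calls carries the arguments as a JSON string; count_todos is a hypothetical local tool and no network call is made:

```python
import json

MODEL = "mistral-medium-latest"


def count_todos(source: str) -> int:
    """Hypothetical local tool: count lines containing a TODO marker."""
    return sum(1 for line in source.splitlines() if "TODO" in line)


# Tool definition advertised to the model (JSON Schema for the arguments).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "count_todos",
        "description": "Count lines containing TODO in a source file.",
        "parameters": {
            "type": "object",
            "properties": {"source": {"type": "string", "description": "File contents"}},
            "required": ["source"],
        },
    },
}]

LOCAL_FUNCTIONS = {"count_todos": count_todos}


def build_tool_request(user_prompt: str) -> dict:
    """Chat-completions body that offers the tools and lets the model choose."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": TOOLS,
        "tool_choice": "auto",
    }


def run_tool_call(tool_call: dict) -> str:
    """Execute one tool call from the model's reply; arguments arrive as a JSON string."""
    fn = LOCAL_FUNCTIONS[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    return json.dumps(fn(**args))


# Simulated entry shaped like choices[0].message.tool_calls[0]
example_call = {
    "id": "call_1",
    "function": {
        "name": "count_todos",
        "arguments": json.dumps({"source": "x = 1  # TODO\ny = 2\n# TODO: test"}),
    },
}
print(run_tool_call(example_call))  # 2
```

In a full loop, the returned string would go back to the model as a message with role "tool" so it can finish its answer; check Mistral's function-calling documentation for the exact fields.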

Strengths

Excellent performance-to-cost ratio: about $0.40 per million input tokens and $2 per million output tokens for roughly 90% of premium-model quality.

Excellent multimodal and long‑context processing.

Business agility through multiple deployment options (hosted, hybrid, on-premises) and platform integrations.

Weaknesses

The weights are proprietary rather than open, offering less transparency than open-weight models such as Magistral Small.

Output speed is somewhat below average (about 77 tokens/s), although time to first token (about 0.39 s) is comparable to peers.
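These two figures give a back-of-envelope estimate of end-to-end response time: time to first token plus output length divided by throughput. A sketch that assumes a constant output rate (real latency varies with load, prompt length, and provider):

```python
# Back-of-envelope response time from the reported figures.
# Assumes a constant output rate after the first token, which real
# deployments only approximate.
TTFT_SECONDS = 0.39        # reported time to first token
TOKENS_PER_SECOND = 77.0   # reported output throughput


def estimated_response_seconds(output_tokens: int,
                               ttft: float = TTFT_SECONDS,
                               tps: float = TOKENS_PER_SECOND) -> float:
    """Approximate time until `output_tokens` tokens have been generated."""
    return ttft + output_tokens / tps


for n in (100, 500, 2_000):
    print(n, round(estimated_response_seconds(n), 2))
```

For a 500-token answer this gives roughly 0.39 + 500/77 ≈ 6.9 s, so throughput rather than first-token latency dominates for long outputs.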

Getting Started: Step-by-Step Guide

Getting started with Mistral Medium 3 takes a few steps:

Step 1: Choose Deployment Mode

Choose between hosted access through the Mistral API and self-hosting in the cloud or on-premises on four or more GPUs.

Step 2: Access the Model

Register with Mistral AI and obtain an API key, or deploy through IBM watsonx.ai, Amazon SageMaker, Azure AI Foundry, Google Vertex AI, or NVIDIA NIM.

Step 3: Use Correct Model Endpoint

Call the pinned version mistral-medium-2505 for stable, reproducible behavior, or the alias mistral-medium-latest to track the newest release.
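A minimal sketch of a request using only the Python standard library, assuming Mistral's chat-completions endpoint at https://api.mistral.ai/v1/chat/completions with bearer-token authentication, and an API key stored in a MISTRAL_API_KEY environment variable (the variable name is our choice). build_request and ask are hypothetical helpers:

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"
PINNED_MODEL = "mistral-medium-2505"    # fixed version: reproducible behavior
LATEST_ALIAS = "mistral-medium-latest"  # follows the newest Medium release


def build_request(prompt: str, model: str = PINNED_MODEL) -> urllib.request.Request:
    """Prepare (but do not send) a chat-completions request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    api_key = os.environ.get("MISTRAL_API_KEY", "")  # assumed env var name
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def ask(prompt: str, model: str = PINNED_MODEL) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__" and os.environ.get("MISTRAL_API_KEY"):
    print(ask("Write a Python one-liner that reverses a string."))
```

Pinning mistral-medium-2505 keeps outputs stable if the alias is later pointed at a newer model; switch to mistral-medium-latest when you want new releases automatically.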

Step 4: Integrate Multimodal Inputs

Send text and images together in requests for tasks such as code generation, image captioning, or document Q&A.
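Text and images travel together as content parts of a single user message. A sketch, assuming the content-part shape described in Mistral's vision documentation ({"type": "text"} and {"type": "image_url"} entries, with the image given as a URL or a base64 data URL); verify the field names against the current docs before relying on them. The helper names are hypothetical and nothing is sent over the network:

```python
import base64
import json

MODEL = "mistral-medium-latest"


def image_to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    return f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")


def build_multimodal_body(question: str, image_url: str) -> dict:
    """One user turn that mixes a text part and an image part."""
    return {
        "model": MODEL,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": image_url},
            ],
        }],
    }


# Placeholder bytes stand in for a real file read with open(path, "rb").read()
body = build_multimodal_body("Summarize the table in this scan.",
                             image_to_data_url(b"\x89PNG placeholder"))
print(json.dumps(body)[:120])
```

The resulting body is POSTed to the chat-completions endpoint like any text-only request.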

Step 5: Optimize Usage

Keep costs in check under the token-based pricing ($0.40 per million input tokens, $2 per million output tokens) by batching requests and sending only the context each task needs.
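Input tokens are billed on every request, so trimming stale conversation history is one simple lever. A hypothetical helper, assuming roughly 4 characters per token (a heuristic, not Mistral's tokenizer), that keeps the system message plus the newest turns fitting a token budget:

```python
from typing import Dict, List

Message = Dict[str, str]


def approx_tokens(text: str) -> int:
    """Rough token estimate: ~4 characters per token (heuristic)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep the leading system message (if any) plus the most recent
    turns whose estimated token total stays within `budget`."""
    system = [m for m in messages[:1] if m["role"] == "system"]
    rest = messages[len(system):]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for msg in reversed(rest):  # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first turn that would overflow the budget
        kept.append(msg)
        used += cost
    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "You are a code reviewer."},
    {"role": "user", "content": "a" * 400},
    {"role": "assistant", "content": "b" * 400},
    {"role": "user", "content": "c" * 40},
]
print([m["role"] for m in trim_history(history, budget=120)])
# ['system', 'assistant', 'user']
```

Dropping whole turns from the oldest end keeps recent context intact; summarizing older turns instead is a common alternative when earlier details still matter.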

Step 6: Customize & Fine‑Tune

Contact Mistral's enterprise team to explore custom post-training or fine-tuning on in-house datasets.

Frequently Asked Questions

Q: Is Mistral Medium 3 free?

A: No. It is a proprietary model with pay-per-use pricing of $0.40 per million input tokens and $2 per million output tokens. Self-hosting on four or more GPUs requires your own infrastructure.

Q: Can I use it on-premises?

A: Yes. It can be self-hosted on as few as four GPUs and also supports hybrid and VPC deployments for business use.

Q: Does it process images and long documents?

A: Yes. It accepts images alongside text and has a 128k-token context window.

Pricing

Mistral Medium 3 uses token-based pricing, billed separately for input and output tokens, at a significantly lower cost than leading competitors.

| Token Type | Price per 1 Million Tokens |
|---|---|
| Input tokens | $0.40 |
| Output tokens | $2.00 |
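Per-request cost follows directly from the two rates in the table. Writing N_in and N_out for the input and output token counts (a worked illustration, not Mistral's billing specification):

```latex
C = \frac{N_{\text{in}}}{10^{6}} \times \$0.40 + \frac{N_{\text{out}}}{10^{6}} \times \$2.00
```

For example, a request with 100,000 input tokens and 20,000 output tokens costs 0.1 × $0.40 + 0.02 × $2.00 = $0.04 + $0.04 = $0.08.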

Alternatives

Claude Sonnet 3.7

Better performance, much higher cost.

Magistral Medium

Mistral's proprietary, paid reasoning model, which uses chain-of-thought for multi-step reasoning tasks.

Llama 4 Maverick

Open-weight, but lags on benchmarks and multimodal performance.

