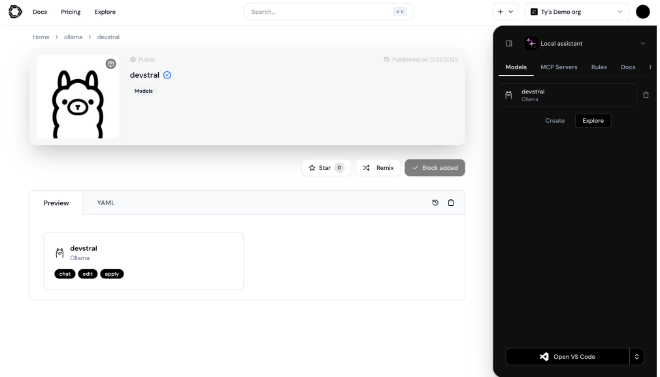

Devstral is an open agentic LLM fine-tuned for real-world software tasks, excelling at code navigation, multi-file edits, and resolving GitHub issues.

Introduction

Devstral is built on Mistral Small 3.1 and optimized for agentic coding. It has a 128k-token context window, which lets it work across large, multi-file codebases, and it omits the vision encoder, making it a text-only model. The weights are released free under Apache 2.0, and the model is also available through Mistral's API at low per-token pricing. It is light enough to run locally or in enterprise deployments, and its open license permits both commercial and community use. A larger version is forthcoming and may bring additional capabilities.

Open‑source

Agentic‑AI

Local‑runtime

Developer‑friendly

Review

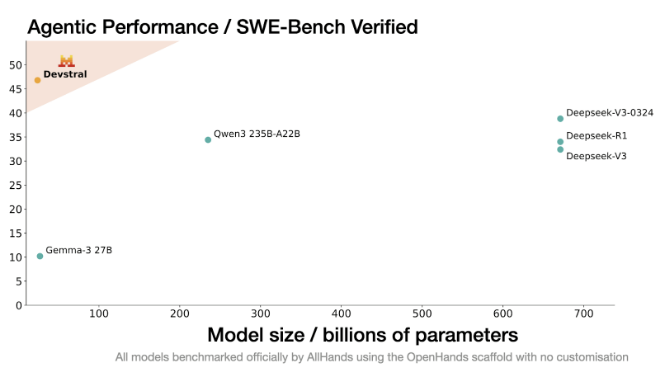

Devstral is an open agentic LLM fine-tuned for real-world software engineering. It performs well at navigating codebases, editing multiple files, and resolving real GitHub issues. On the SWE-Bench Verified benchmark it outperforms all other open-source models and beats GPT-4.1-mini by more than 20%. The model runs locally on accessible hardware such as a single RTX 4090 GPU or a Mac with 32 GB RAM, and is released under the permissive Apache 2.0 license. Its combination of performance, flexibility, and low cost makes it an appealing option for developers and teams.

Features

Agentic Code Understanding

Resolves real GitHub issues and tracks context across files.

Robust Benchmark Performance

46.8% on SWE-Bench Verified, 6 points ahead of the previous best open-source model and more than 20% ahead of GPT-4.1-mini.

Light Local Deployment

Runs on a single RTX 4090 or a Mac with 32 GB RAM.

Permissive Apache 2.0 License

Suitable for both commercial and personal use.

Flexible Platform Support

Accessible through Hugging Face, Ollama, Kaggle, LM Studio; compatible with vLLM, Transformers, OpenHands.

API Access

Input tokens $0.10/million, output tokens $0.30/million.

Best Suited for

Software Engineers & Dev Teams

Best for developing coding agents, IDE plugins, and bug fix automation.

Enterprises

Local deployment keeps code confidential and supports compliance requirements.

AI Enthusiasts

A powerful local AI playground that encourages experimentation.

Academic / Research Projects

Open‑source under Apache 2.0, ideal for innovation and teaching.

Strengths

Top-tier benchmark performance among open‑source models.

Runs locally on mainstream dev hardware.

Flexible licensing and deployment options.

Multi‑file and context‑aware coding capabilities.

Weaknesses

Still in research preview, so some rough edges are to be expected.

Needs a fairly high-end GPU or a Mac with 32 GB RAM to perform well.

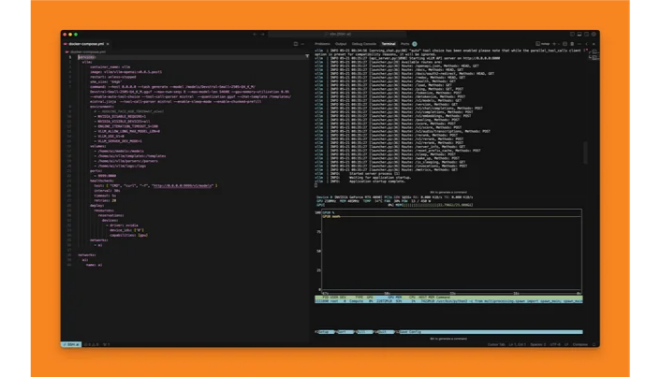

Getting started with Devstral: step-by-step guide

Getting started with Devstral is straightforward:

Step 1: Select Your Environment

Choose where to run the model: Hugging Face, Ollama, Kaggle, LM Studio, or the Mistral API.

Step 2: Install and Load the Model

For local usage, follow the Hugging Face or Ollama instructions; for API usage, request the model devstral-small-2505.

Step 3: Integrate with Tools

Use the OpenHands or SWE-Agent scaffolds to run agentic workflows across codebases.

Step 4: Prompt & Refine

Give the model real coding tasks. Optionally adjust the context window or re-prompt to refine the outputs.

Step 5: Deploy or Experiment

Deploy within an IDE or in pipelines, or experiment with standalone code agents.
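As a rough illustration of the API route in Step 2, the sketch below builds a chat request for devstral-small-2505 using only the Python standard library. The endpoint URL, the payload shape, and the `MISTRAL_API_KEY` environment variable name are assumptions based on Mistral's public chat completions API and are not stated in this review, so check the current API documentation before relying on them. The helper name `build_request` and the example prompt are hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint of Mistral's chat completions API (not given in this review).
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "devstral-small-2505"  # model name quoted in Step 2


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request carrying one user message."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # MISTRAL_API_KEY is an assumed variable name; nothing is sent without it.
    key = os.environ.get("MISTRAL_API_KEY")
    if key is None:
        print("Set MISTRAL_API_KEY to send the request.")
    else:
        req = build_request("Explain what this repository's test suite covers.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        # Assumed response shape: the first choice holds the assistant message.
        print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes the payload easy to inspect, or to reuse inside an agent scaffold, before any tokens are billed.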

Frequently Asked Questions

Q: What is Devstral?

A: An open, 24B‑parameter agentic LLM fine‑tuned for software dev tasks.

Q: Is Devstral free to use?

A: Yes, the weights are released under Apache 2.0; API usage is billed per token.

Q: What hardware does it require?

A: It runs on a single RTX 4090 GPU or a Mac with at least 32 GB RAM.
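To put the hardware answer in context, here is a back-of-the-envelope estimate of the memory needed just to hold 24B parameters at different precisions. This is a sketch under stated assumptions: it counts weights only (no KV cache, activations, or runtime overhead), and the review does not say which precision or quantization the local builds use. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


PARAMS = 24e9  # 24B parameters, as stated in the FAQ above

if __name__ == "__main__":
    # The precisions below are illustrative choices, not settings from the review.
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

At 16 bits per parameter the weights alone come to roughly 48 GB, more than the 24 GB of VRAM on an RTX 4090, which suggests that single-GPU use relies on a quantized build. That inference is an assumption, not something this review states.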

Pricing

Devstral is free to use locally under the Apache 2.0 license, with commercial-friendly terms. For API access, pricing is simple and developer-friendly, billed per million tokens.

API Input Tokens

$0.10 per million tokens.

API Output Tokens

$0.30 per million tokens.

Local Usage

Free, open weights available for deployment via Hugging Face, Ollama, LM Studio, and others.

Mistral does not enforce usage limits on local deployments, making Devstral ideal for teams preferring on-premises inference with full control over data and compute.
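The per-token API rates above can be turned into a quick cost estimate. The sketch below is a minimal illustration; the function name `estimate_api_cost` and the example workload are hypothetical, and only the two rates come from this page.

```python
# Rates quoted above, in US dollars per million tokens.
INPUT_RATE_PER_MILLION = 0.10
OUTPUT_RATE_PER_MILLION = 0.30


def estimate_api_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in US dollars for the given token counts."""
    total = (
        input_tokens * INPUT_RATE_PER_MILLION
        + output_tokens * OUTPUT_RATE_PER_MILLION
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2,000 requests of 8,000 input and 1,000 output tokens each.
    cost = estimate_api_cost(2_000 * 8_000, 2_000 * 1_000)
    print(f"Estimated cost: ${cost:.2f}")
```

For that hypothetical workload the estimate is about $2.20: 16 million input tokens at $0.10 per million plus 2 million output tokens at $0.30 per million.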

Alternatives

Codestral (Mistral)

Optimized for fill-in-the-middle (FIM) completion and test generation, but less agentic.

GPT‑4.1‑mini

Strong coding model, but proprietary and cannot be run locally.

Google Gemma 3

Similar benchmarks, not tuned for full-stack agentic workflows.
