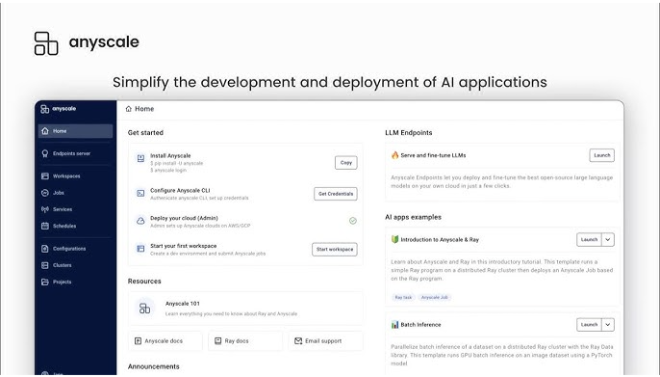

Anyscale Endpoints is a high-performance service designed for developers to integrate open-source large language models (LLMs) into their applications.

Introduction

As open-source AI models continue to close the gap with proprietary giants, the challenge for enterprises has shifted from model quality to deployment efficiency. Running these models at scale requires specialized infrastructure and deep expertise in GPU orchestration. Anyscale Endpoints bridges this gap by offering a fully managed, serverless platform for open-source LLM inference. Leveraging the power of the Ray framework, Anyscale provides developers with a robust, scalable, and cost-efficient way to deploy models like Llama 3 and Mistral in production environments. For digital marketers, software engineers, and business decision-makers, Anyscale Endpoints offers a path to high-performance AI integration that remains flexible, secure, and focused on operational excellence.

Ray-Powered

Open-Source Focused

Ultra-Low Latency

Enterprise-Grade Scaling

Review

Anyscale Endpoints is a high-performance, managed service designed for developers who need to integrate open-source large language models (LLMs) into their applications without the operational burden of managing GPUs. Built by the creators of Ray, the industry-standard framework for scaling AI workloads, Anyscale Endpoints is engineered for massive concurrency and ultra-low latency. It provides a curated selection of state-of-the-art models, including the Llama 3 family and Mixtral, all accessible via an OpenAI-compatible API for seamless integration.

The platform stands out by offering a “serverless” experience that automatically handles the complexities of autoscaling and hardware optimization. This allows teams to move from prototyping to production scale, supporting thousands of requests per second, with minimal code changes (typically just the API base URL and the model name). While it is a developer-centric tool requiring API knowledge, its focus on cost-effective, fast inference for open-source models makes it a strong choice for businesses looking to avoid vendor lock-in with proprietary AI providers.

Features

OpenAI-Compatible API

Allows developers to switch from proprietary models to open-source alternatives with minimal code changes using familiar SDKs.

Optimized Ray Runtime

Utilizes the Ray ecosystem to provide industry-leading throughput and latency, ensuring applications remain responsive even under heavy load.

Curated Model Selection

Instant access to the latest and most capable open-source models, including Llama 3 (8B, 70B, 405B) and Mixtral 8x22B.

Automatic Autoscaling

The serverless architecture instantly scales GPU resources up or down based on traffic, eliminating "cold starts" and manual cluster management.

Fine-Tuning Support

Enables users to easily customize open-source models on their proprietary datasets for specialized domain knowledge or brand voice.

Enterprise Security & Compliance

Offers SOC 2 compliance and the option to deploy in your own Virtual Private Cloud (VPC) for maximum data privacy.

Best Suited for

Software Engineering Teams

Building real-time AI features like coding assistants or chatbots that require high-speed model responses.

SaaS Product Leaders

Scaling from zero to millions of users without needing a dedicated DevOps or ML infrastructure team.

Enterprise Solution Architects

Seeking to reduce "AI spend" by migrating high-volume workloads from expensive proprietary models to open-source alternatives.

Data Scientists

Performing large-scale batch processing, such as analyzing millions of customer reviews or generating embeddings for RAG systems.

SaaS Product Managers

Looking to add AI capabilities while maintaining strict control over data privacy and avoiding vendor lock-in.

RAG (Retrieval-Augmented Generation) Builders

Powering the "reasoning" layer of knowledge bases with reliable, high-speed LLM endpoints.

Strengths

Unmatched Scalability

Superior Cost-Efficiency

Ease of Migration

State-of-the-Art Infrastructure

Weaknesses

Developer-Only Tool

Limited to Supported Open-Source Models

Getting Started with Anyscale Endpoints: Step-by-Step Guide

Step 1: Create an Account and Get API Key

Sign up at the Anyscale website. Navigate to the Endpoints dashboard to generate your unique API key.

Step 2: Choose Your Model

Select your target model from the list of supported LLMs (e.g., meta-llama/Llama-3-70b-chat-hf) based on your accuracy and speed needs.

Step 3: Update Your API Base URL

In your application code, replace the standard OpenAI base URL with Anyscale’s endpoint: https://api.endpoints.anyscale.com/v1.

Step 4: Run Your First Inference

Use your preferred Python or Node.js SDK to send a request. The platform will automatically provision the necessary GPU resources and return the result.
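Steps 2 to 4 can be sketched in Python as follows. This is a minimal illustration, not official client code: it assumes the service follows the OpenAI convention of a `/chat/completions` path and Bearer-token authentication, and the environment variable name `ANYSCALE_API_KEY` is hypothetical. It uses only the standard library so the shape of the request is visible; with the OpenAI Python SDK, the equivalent change is passing the same URL as `base_url` when constructing the client.

```python
import json
import os
import urllib.request

ANYSCALE_BASE_URL = "https://api.endpoints.anyscale.com/v1"  # base URL from Step 3
MODEL = "meta-llama/Llama-3-70b-chat-hf"  # example model from Step 2


def build_chat_request(api_key, prompt, model=MODEL, base_url=ANYSCALE_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumption: the OpenAI-compatible path is /chat/completions.
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # ANYSCALE_API_KEY is a hypothetical variable name; nothing is sent without it.
    key = os.environ.get("ANYSCALE_API_KEY")
    if key:
        request = build_chat_request(key, "Say hello in one sentence.")
        print(send_chat_request(request))
```

Keeping request construction separate from sending makes it easy to check the URL, headers, and payload before any tokens are billed.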

Step 5: Monitor and Scale

Use the Anyscale dashboard to monitor your token usage, latency, and costs in real time as your application scales to production.
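Token usage can also be tallied on the client side as a cross-check against the dashboard. The sketch below assumes each response body follows the OpenAI chat completion schema, with a `usage` object containing `prompt_tokens` and `completion_tokens`; the `UsageTracker` class is a hypothetical helper, not part of any Anyscale SDK.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Accumulates token counts from OpenAI-style response bodies."""

    prompt_tokens: int = 0
    completion_tokens: int = 0
    requests: int = 0

    def record(self, response_body: dict) -> None:
        # Assumption: usage is reported under the "usage" key; a missing
        # field is counted as zero rather than raising an error.
        usage = response_body.get("usage", {})
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        self.completion_tokens += usage.get("completion_tokens", 0)
        self.requests += 1

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


tracker = UsageTracker()
tracker.record({"usage": {"prompt_tokens": 12, "completion_tokens": 30}})
print(tracker.total_tokens)  # 42
```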

Frequently Asked Questions

Q: What is the relationship between Anyscale and Ray?

A: Anyscale is the commercial company founded by the creators of Ray, the open-source distributed computing framework for scaling AI training and serving workloads. OpenAI, for example, has publicly described using Ray to train the models behind ChatGPT.

Q: Is Anyscale Endpoints better than ChatGPT?

A: For many production use cases, it is more flexible and cost-effective. While ChatGPT is a finished product, Anyscale Endpoints is the infrastructure that lets you build your own high-performance products using open-source models.

Q: Does Anyscale support fine-tuning?

A: Yes, Anyscale Endpoints provides a managed service for fine-tuning open-source models, allowing you to train them on your specific business data.

Pricing

Anyscale Endpoints uses a simple, pay-as-you-go consumption model based on the number of tokens processed.

| Model Tier | Example Model | Price per 1M Input Tokens | Price per 1M Output Tokens |
| --- | --- | --- | --- |
| Small Models | Llama 3 8B | $0.15 | $0.15 |
| Medium Models | Mixtral 8x7B | $0.50 | $0.50 |
| Large Models | Llama 3 70B | $1.00 | $1.00 |
| Frontier OSS | Llama 3 405B | $5.00 | $5.00 |

Note: Dedicated “Private Endpoints” on reserved hardware are available for enterprise customers with custom pricing.
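To see how the per-token pricing translates into a bill, here is a small estimate sketch. The prices are copied from the table above and may not reflect current rates; the function name `estimate_cost` and the dictionary keys are illustrative choices, not identifiers from the Anyscale API.

```python
# USD per 1M tokens as (input, output), taken from the pricing table above.
PRICE_PER_MILLION = {
    "Llama 3 8B": (0.15, 0.15),
    "Mixtral 8x7B": (0.50, 0.50),
    "Llama 3 70B": (1.00, 1.00),
    "Llama 3 405B": (5.00, 5.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    input_price, output_price = PRICE_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# 2M input tokens and 500K output tokens on Llama 3 70B:
print(estimate_cost("Llama 3 70B", 2_000_000, 500_000))  # 2.5
```

Input and output prices are kept as separate values even though the table lists them as equal, so the function still works if the two rates diverge.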

Alternatives

Fireworks AI

A direct competitor known for extreme inference speed and a deep library of optimized open-source models.

Together AI

Offers one of the largest selections of open-source models with a strong focus on community and developer flexibility.

OctoAI (NVIDIA)

Focuses on model-level optimization and "OctoStack" for deploying models efficiently across different cloud and hardware types.

