Cohere Command R+ is a large language model (LLM) specifically engineered for complex enterprise workloads that demand high performance and reasoning.

Introduction

For technology leaders and developers tasked with deploying reliable, scalable AI solutions, projects often stall on two problems: ensuring accuracy and controlling model behavior. Simple chatbots cannot handle complex, multi-step business processes. Cohere Command R+ addresses this with an LLM optimized for enterprise infrastructure and application development. It sets itself apart through two core capabilities: enhanced Retrieval Augmented Generation (RAG) for factual accuracy, and Multi-Step Tool Use for automating intricate business workflows.

Command R+ is more than a conversational engine. It is a reasoning layer designed to integrate with proprietary data, execute sequential actions against external systems, and operate effectively across 10 key languages for global business. By focusing on performance, safety, and verifiable results, Command R+ positions itself as a trusted intelligence layer for the next generation of enterprise AI applications.

Enterprise LLM

RAG Optimized

Multi-Step Agents

128K Context

Review

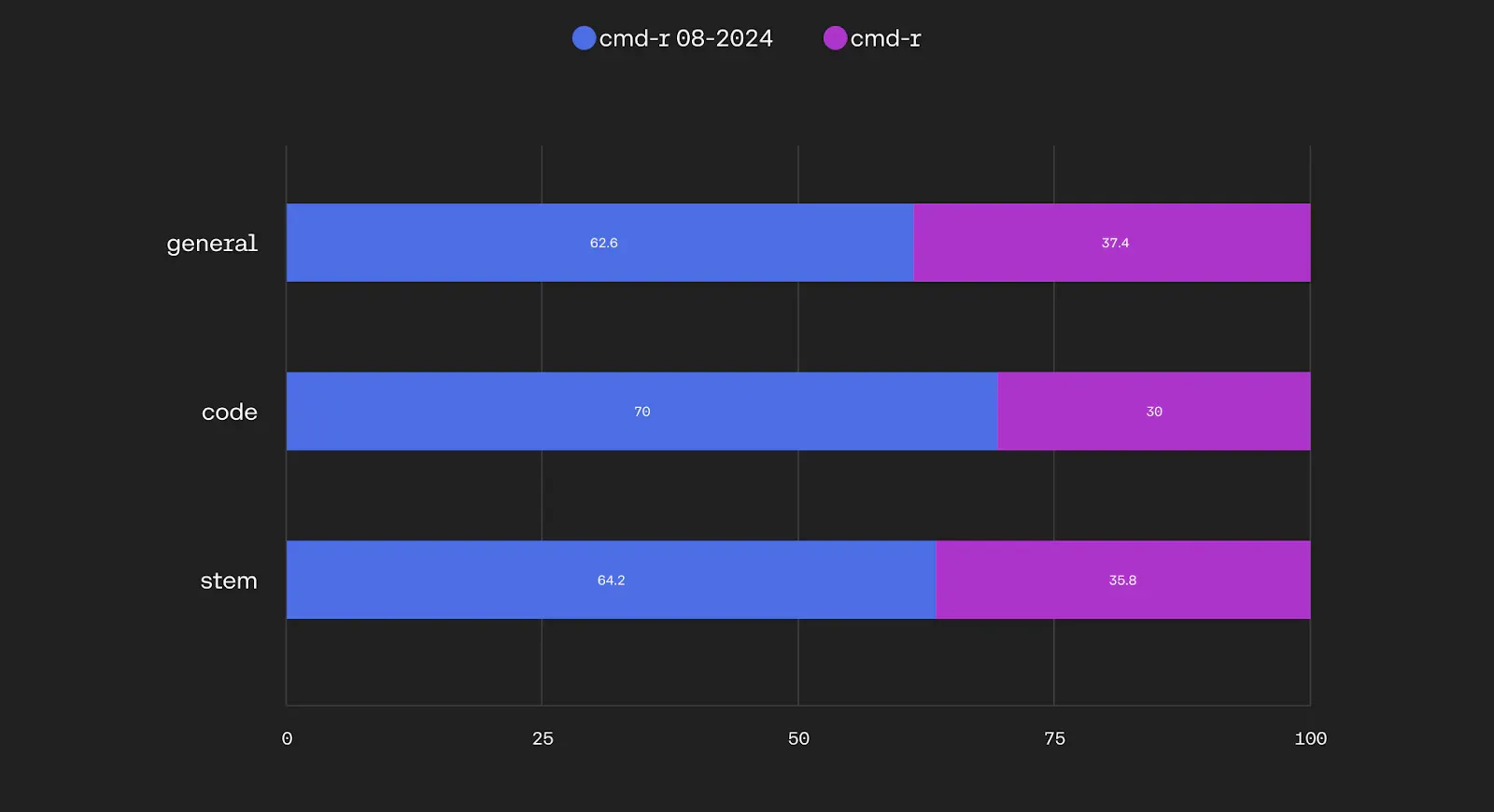

Released to move companies beyond proof-of-concept and into production, Command R+ is characterized by a long 128,000-token context window and advanced multi-step tool-use capabilities. Unlike general-purpose models, it is heavily optimized for Retrieval Augmented Generation (RAG): it grounds its answers in external, proprietary documents and generates citations to reduce hallucinations.

This makes it a foundational component for building accurate, trustworthy conversational AI and sophisticated AI agents. Its token-based pricing is significantly higher than that of its lower-tier counterpart, Command R, but the cost is justified by its stronger performance in complex reasoning, multilingual RAG, and multi-step tool use. It is a leading choice for organizations building mission-critical applications that require verifiable outputs.

Features

Retrieval Augmented Generation (RAG) Optimization

Optimized for high-precision RAG workflows, enabling the model to retrieve information from supplied documents and generate reliable answers with citations.
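To make the citation idea concrete, here is a minimal sketch of how cited spans could be surfaced to an end user as inline markers. The citation shape (`start`, `end`, `document_ids`) and the function name `annotate_with_citations` are assumptions for illustration; check the actual API response before relying on them.

```python
from typing import Dict, List


def annotate_with_citations(text: str, citations: List[Dict]) -> str:
    """Insert bracketed source markers after each cited span.

    Each citation is assumed (hypothetically) to look like:
    {"start": int, "end": int, "document_ids": ["doc_0", ...]}
    where start/end are character offsets into `text`.
    """
    out = text
    # Work backwards from the end so earlier offsets stay valid.
    for cit in sorted(citations, key=lambda c: c["end"], reverse=True):
        marker = "[" + ", ".join(cit["document_ids"]) + "]"
        out = out[: cit["end"]] + " " + marker + out[cit["end"]:]
    return out


answer = "Refunds are accepted within 30 days."
cites = [{"start": 21, "end": 35, "document_ids": ["doc_0"]}]
print(annotate_with_citations(answer, cites))
```

Keeping the markers tied to document identifiers lets a reviewer trace each claim back to the supplied source.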

128,000-Token Context Window

Supports an extremely long conversation history and large document processing, essential for complex tasks and analyzing lengthy proprietary knowledge bases.
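As a rough illustration of what the window size means in practice, the sketch below estimates whether a document is likely to fit. The words-per-token ratio and the output reserve are assumptions, not published figures; a real tokenizer count should replace the estimate in production.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # the stated context length
WORDS_PER_TOKEN = 0.75           # rough rule of thumb for English (assumption)


def estimate_tokens(text: str) -> int:
    """Crude token estimate derived from a whitespace word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """True if the estimated prompt size still leaves room for the reply.

    `reserved_for_output` is a hypothetical budget for generated tokens.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

Under these assumptions, a document of about 90,000 words fits with room for a reply, while one of 100,000 words sits right at the edge and would likely need chunking or retrieval.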

Multi-Step Tool Use (Agentic Capabilities)

Allows the model to execute a sequence of actions by calling multiple user-defined tools or APIs in a chain, which is key for automating complex business processes.
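The chaining described above can be sketched as a simple loop in which each tool result feeds the next decision. Everything here is hypothetical: `scripted_planner` stands in for the model, and `lookup_customer` and `list_open_orders` are placeholder tools rather than real APIs.

```python
from typing import Callable, Dict, List, Optional


# Hypothetical tools; in practice these would wrap real internal APIs.
def lookup_customer(email: str) -> dict:
    return {"customer_id": "C-17", "email": email}


def list_open_orders(customer_id: str) -> dict:
    return {"customer_id": customer_id, "orders": ["O-1", "O-2"]}


TOOLS: Dict[str, Callable[..., dict]] = {
    "lookup_customer": lookup_customer,
    "list_open_orders": list_open_orders,
}


def scripted_planner(history: List[dict]) -> Optional[dict]:
    """Stand-in for the model: chooses the next tool call from prior results."""
    if not history:
        return {"name": "lookup_customer", "parameters": {"email": "a@example.com"}}
    if len(history) == 1:
        cid = history[0]["result"]["customer_id"]
        return {"name": "list_open_orders", "parameters": {"customer_id": cid}}
    return None  # no further calls: the plan is complete


def run_agent(planner: Callable, max_steps: int = 5) -> List[dict]:
    """Execute tool calls one after another until the planner stops."""
    history: List[dict] = []
    for _ in range(max_steps):  # hard cap guards against runaway loops
        call = planner(history)
        if call is None:
            break
        result = TOOLS[call["name"]](**call["parameters"])
        history.append({"call": call, "result": result})
    return history
```

The step cap is a deliberate design choice: an agent that can call tools in sequence should always have an upper bound on how many actions it takes per request.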

Multilingual Coverage

Optimized for high performance across 10 key global business languages, including English, French, Spanish, German, Japanese, and Chinese, with support for 13 additional languages.

Structured Outputs

Capable of generating responses in specific, machine-readable formats like JSON, enabling seamless integration with other software applications and internal data pipelines.
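Machine-readable output is only useful if the receiving code checks it. A minimal sketch of that check follows; the field names in `REQUIRED_FIELDS` are a hypothetical schema chosen for illustration.

```python
import json
from typing import Any, Dict

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"ticket_id": str, "priority": str, "summary": str}


def parse_structured_reply(raw: str) -> Dict[str, Any]:
    """Parse a JSON reply and verify required keys and their types."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected in REQUIRED_FIELDS.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        if not isinstance(data[key], expected):
            raise ValueError(f"wrong type for field: {key}")
    return data
```

Rejecting a malformed reply at this boundary keeps bad data out of downstream pipelines and gives the caller a clear point at which to retry.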

Safety Modes (Contextual and Strict)

Offers configurable safety filters, allowing developers to choose between a "Contextual" mode for creative use or a "Strict" mode better suited for corporate and customer service applications.

Best Suited for

Enterprise AI Agent Development

Building sophisticated AI agents that can manage multi-step processes across various internal systems.

Complex Internal Knowledge Retrieval

Powering internal Q&A systems where accuracy and the ability to cite sources from private documents are non-negotiable.

Software Development

Generating, reviewing, and reasoning about complex code with enhanced mathematical and coding skills.

Long-Form Document Analysis

Processing and summarizing ultra-long legal, financial, or technical documents that require the 128K token context window.

Multilingual Customer Service

Deploying conversational AI or agents that require high-quality understanding and response generation across the 10 key supported global languages.

Financial and Legal Tech

Applications demanding verifiable outputs and reasoning, where grounding generations in source data is critical for compliance.

Strengths

State-of-the-Art RAG Performance

Advanced Agentic Capabilities

Long 128K Context Window

Production-Ready Enterprise Focus

Weaknesses

High, Unpredictable Token Cost

Limited to API Integration

Getting Started with Cohere Command R+: Step-by-Step Guide

Deployment requires a developer-centric workflow focusing on the API.

Step 1: Obtain an API Key

Sign up for a Cohere account to obtain an API key, which is required to authenticate all requests. Trial usage is often free but limited.

Step 2: Access the Playground for Experimentation

Use the Cohere dashboard Playground to test prompts, experiment with different RAG and tool-use configurations, and understand the model’s behavior before writing any code.

Step 3: Define External Tools (For Agents)

For agentic workflows, define the available external tools (e.g., CRM API, database search) and their functions as JSON objects, which the model will use to generate action payloads.

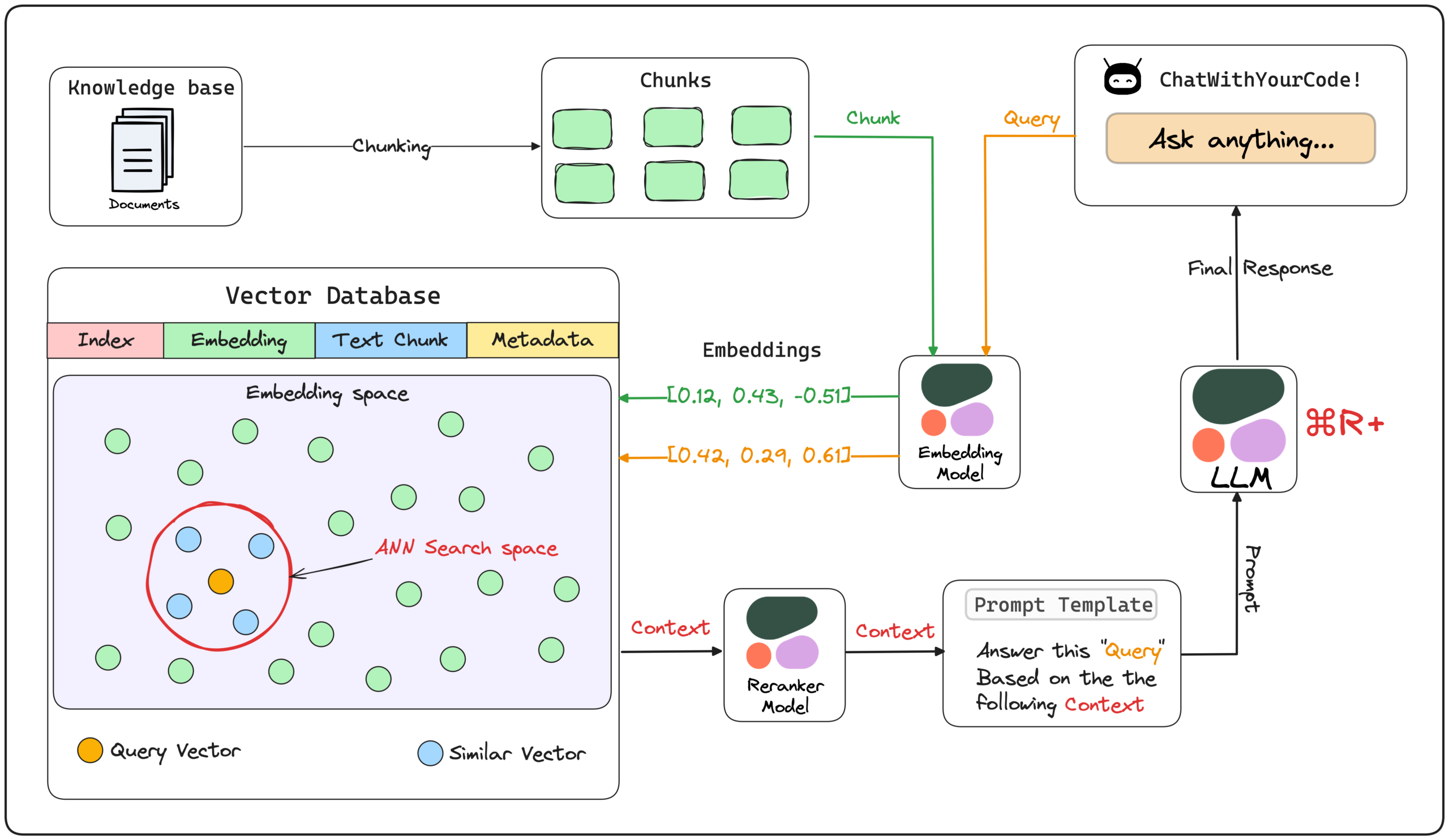

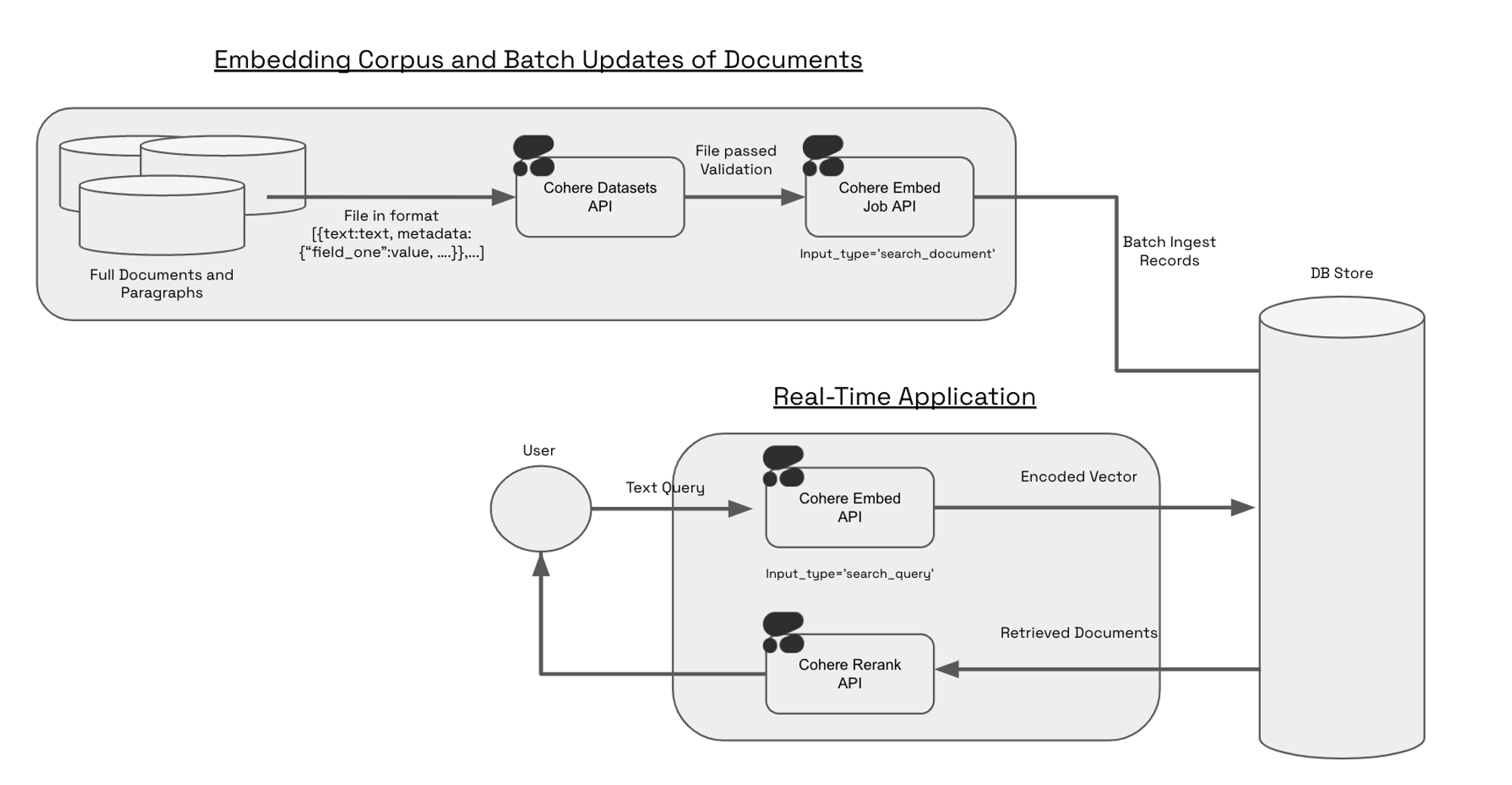
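As a sketch of what a tool definition and its matching action payload might look like, the example below uses a JSON-Schema-like layout. The layout, the tool name `search_crm`, and the helper `validate_payload` are all assumptions for illustration; the exact field names expected by the API should be taken from its reference documentation.

```python
import json

# Hypothetical tool definition in a JSON-Schema-like layout.
SEARCH_CRM_TOOL = {
    "name": "search_crm",
    "description": "Look up a customer record by email address.",
    "parameters": {
        "type": "object",
        "properties": {
            "email": {"type": "string", "description": "Customer email"},
            "include_orders": {"type": "boolean", "description": "Attach order history"},
        },
        "required": ["email"],
    },
}

_PY_TYPES = {"string": str, "boolean": bool, "integer": int, "number": (int, float)}


def validate_payload(tool: dict, payload: dict) -> list:
    """Return a list of problems with a generated action payload (empty if valid)."""
    problems = []
    if payload.get("name") != tool["name"]:
        return ["unknown tool: " + str(payload.get("name"))]
    schema = tool["parameters"]
    args = payload.get("parameters", {})
    for key in schema["required"]:
        if key not in args:
            problems.append("missing required parameter: " + key)
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append("unexpected parameter: " + key)
        elif not isinstance(value, _PY_TYPES[spec["type"]]):
            problems.append("wrong type for parameter: " + key)
    return problems


# Show the definition as the JSON object that would be sent along with a request.
print(json.dumps(SEARCH_CRM_TOOL, indent=2))
```

Validating each generated payload before executing it is a cheap safeguard when the tool touches a production system such as a CRM.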

Step 4: Implement RAG Pipeline and Document Chunking

Build the RAG pipeline with supporting models (Cohere Embed, Rerank) and tools like LlamaIndex. This requires chunking proprietary documents into small, searchable segments.
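Chunking can be done in many ways; the sketch below shows one simple approach, fixed-size word windows with overlap. The default sizes are arbitrary assumptions, and frameworks such as LlamaIndex ship their own splitters that would normally be used instead.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into overlapping word-based chunks."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

The overlap means a sentence that straddles a boundary still appears intact in at least one chunk, which tends to help retrieval quality at the cost of some duplicated storage.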

Step 5: Deploy via API or Cloud Service

Deploy the model using the Cohere API or through dedicated cloud platforms like Amazon SageMaker JumpStart for a secure, scalable production endpoint.
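For the direct API route, a minimal sketch of assembling a grounded chat request with only the standard library is shown below. The endpoint URL, model identifier, body fields (`message`, `documents`), and the `COHERE_API_KEY` variable name are assumptions based on common usage and should be confirmed against the current API reference. The request is built but intentionally not sent.

```python
import json
import os
import urllib.request

# Assumed endpoint and model identifier; confirm both in the API reference.
CHAT_URL = "https://api.cohere.com/v1/chat"
MODEL_NAME = "command-r-plus"


def build_chat_request(message: str, documents: list, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a document-grounded chat call."""
    body = {"model": MODEL_NAME, "message": message, "documents": documents}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Read the key from the environment rather than hard-coding it.
api_key = os.environ.get("COHERE_API_KEY", "demo-key")  # variable name is an assumption
request = build_chat_request(
    "What is our refund window?",
    [{"title": "Refund policy", "snippet": "Refunds are accepted within 30 days."}],
    api_key,
)
# Sending is left out on purpose; urllib.request.urlopen(request) would perform the call.
```

In a managed cloud deployment the URL and authentication scheme would differ, but the pattern of sending the question together with supporting documents stays the same.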

Frequently Asked Questions

Q: What is the primary function of Cohere Command R+?

A: Command R+ is a high-performance LLM optimized for complex enterprise tasks, specializing in generating accurate, verifiable answers using Retrieval Augmented Generation (RAG) and automating tasks with multi-step tool use.

Q: What is the significance of the 128K context window?

A: The 128,000-token context window allows the model to process approximately 100,000 words in a single interaction, enabling it to maintain context over long conversations and analyze very large documents accurately.

Q: Does Command R+ provide citations in its responses?

A: Yes, a core feature of Command R+’s RAG optimization is its ability to include citations in its generated responses, pointing back to the specific source documents to ensure factual accuracy and verifiability.

Pricing

Cohere Command R+ uses a pay-as-you-go, token-based pricing structure, differentiating between input (the prompt you send) and output (the response generated by the model).

| Model | Use Case | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) |
| --- | --- | --- | --- |
| Command R+ | High-performance, complex tasks, and multi-step agents | $2.50 | $10.00 |
| Command R | Balanced performance for RAG and simpler tool use | $0.15 | $0.60 |

Note: The actual price of an interaction is the sum of the input and output token costs.
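The note above can be worked through with a short calculation. This sketch uses the per-million-token prices listed in this review; the token counts in the usage example are made up for illustration.

```python
from decimal import Decimal

# USD per 1M tokens, copied from the pricing figures in this review.
PRICES = {
    "Command R+": {"input": Decimal("2.50"), "output": Decimal("10.00")},
    "Command R": {"input": Decimal("0.15"), "output": Decimal("0.60")},
}
MILLION = Decimal(1_000_000)


def interaction_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in USD of one interaction: input cost plus output cost."""
    p = PRICES[model]
    return (p["input"] * input_tokens + p["output"] * output_tokens) / MILLION


# Hypothetical RAG call: 20,000 prompt tokens (documents included), 1,000 reply tokens.
print(interaction_cost("Command R+", 20_000, 1_000))  # 0.06 USD
print(interaction_cost("Command R", 20_000, 1_000))   # 0.0036 USD
```

Because RAG prompts carry retrieved documents, input tokens usually dominate the bill, so trimming what is retrieved is often the most direct way to control cost.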

Alternatives

OpenAI (GPT-4/GPT-4o)

Provides state-of-the-art models with excellent creative capabilities and a widely adopted ecosystem, though RAG grounding is often a custom integration.

Mistral AI (Mistral Large)

Offers powerful models focused on fast inference and strong reasoning, appealing to teams that value high performance and cost efficiency.

Google (Gemini Series/Vertex AI)

A multimodal powerhouse with strong reasoning and direct integration into the robust and scalable Google Cloud infrastructure (Vertex AI).
