Replicate is a powerful platform that is radically simplifying the deployment of machine learning models via a cloud API.

Introduction

Deploying a complex machine learning model to production is notoriously difficult: it involves building Docker images, managing GPUs, configuring auto-scaling, and keeping latency low. Replicate was built to abstract away this infrastructure entirely. It lets developers call advanced AI models, which are often complex Python scripts, as if they were simple functions.

Replicate democratizes access to state-of-the-art AI through its open-source tool, Cog, which containerizes models into a standard, production-ready format. The ecosystem offers a large model library, fine-tuning capabilities, and an API that can be integrated into almost any application. For startups and enterprises alike, Replicate is a shortcut to the latest AI models without having to provision or maintain GPU hardware themselves.

API Deployment

Open Source Models

LLM Hosting

Per-Second Billing

Review

Founded in 2018 by Ben Firshman and Andreas Jansson, Replicate acts as a unified gateway for running thousands of open-source and proprietary models, from generative AI models such as FLUX and Imagen to large language models (LLMs) such as Llama 3, without requiring developers to manage any infrastructure.

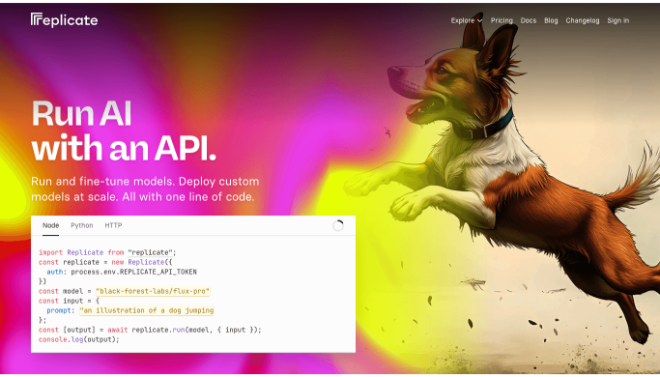

Its core value is ease of use: developers can run a model, or fine-tune one that supports training, with just a few lines of code using the client libraries for Python, Node.js, Go, or Swift. Pricing depends on the hardware used and how long the model runs, billed per second, so costs are transparent and pay-as-you-go. The sheer number of community models can sometimes make licensing unclear, but Replicate remains a strong choice for developers who want to integrate high-performance AI into their apps, websites, or serverless functions quickly.

Features

Unified Cloud API

Provides a single, consistent API for running predictions across thousands of machine learning models, typically with one function call in the client libraries.
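To show what the unified API looks like underneath the client libraries, here is a minimal sketch that builds (but does not send) a raw HTTP request to create a prediction. It assumes the official-model route POST https://api.replicate.com/v1/models/{owner}/{name}/predictions and bearer-token authentication as described in Replicate's HTTP API reference; the helper name build_prediction_request is hypothetical.

```python
import json
import urllib.request

# Assumed base URL of Replicate's HTTP API.
API_BASE = "https://api.replicate.com/v1"


def build_prediction_request(token: str, model: str, inputs: dict) -> urllib.request.Request:
    """Hypothetical helper: build (without sending) a POST that creates a prediction.

    `model` is an "owner/name" path such as "black-forest-labs/flux-pro".
    Assumes the official-model route /models/{owner}/{name}/predictions.
    """
    owner, name = model.split("/", 1)
    body = json.dumps({"input": inputs}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/models/{owner}/{name}/predictions",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_prediction_request("YOUR_API_TOKEN", "black-forest-labs/flux-pro", {"prompt": "Your idea"})
    # Passing req to urllib.request.urlopen with a real token would submit it.
    print(req.get_method(), req.full_url)
```

The official client libraries wrap calls like this one, so most applications never need to build the request by hand.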

Cog Model Packaging

Uses the open-source tool Cog to package models into standard, production-ready Docker containers, improving reproducibility and portability.

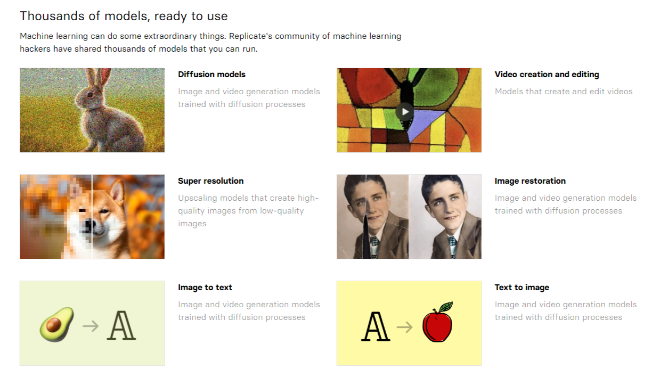

Vast Model Library

Hosts a continuously updated catalog of models for a wide range of tasks (image generation, video, LLMs, upscaling, speech), including major official models such as Imagen and FLUX.

Fine-Tuning Capability

Allows users to bring their own training data to easily fine-tune existing models (like Stable Diffusion or Llama) to create specialized versions.

Per-Second Billing

Bills compute time by the second across a broad range of GPUs, from cost-effective consumer cards (RTX 4090) to top-tier data center accelerators (NVIDIA A100, H100, H200).

Webhooks for Asynchronous Jobs

Supports webhooks to handle long-running predictions (like video generation or training) asynchronously, preventing application timeouts.
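A minimal sketch of how an asynchronous prediction request might be shaped. It assumes the webhook and webhook_events_filter fields that Replicate's prediction API documents, with the event names start, output, logs, and completed; the helper build_async_body and the callback URL in the example are hypothetical.

```python
import json

# Event names assumed from Replicate's documentation of webhook_events_filter.
ALLOWED_EVENTS = {"start", "output", "logs", "completed"}


def build_async_body(inputs: dict, webhook_url: str, events=("completed",)) -> str:
    """Hypothetical helper: return a JSON prediction body that asks Replicate to
    POST updates to `webhook_url` instead of making the caller wait."""
    unknown = set(events) - ALLOWED_EVENTS
    if unknown:
        raise ValueError(f"unsupported webhook events: {sorted(unknown)}")
    return json.dumps({
        "input": inputs,
        "webhook": webhook_url,
        "webhook_events_filter": list(events),
    })


if __name__ == "__main__":
    print(build_async_body({"prompt": "a short video of waves"}, "https://example.com/hooks/replicate"))
```

Filtering to "completed" keeps traffic to the callback endpoint low; adding "output" or "logs" would stream intermediate progress at the cost of more requests.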

Best Suited for

Software Developers & Web Engineers

To easily integrate cutting-edge AI features (e.g., image generation) into web, mobile, or backend applications via a simple API.

AI Startups & Product Teams

For rapidly testing, prototyping, and deploying the latest open-source models into their Minimum Viable Products (MVPs).

Creative Developers & Artists

To access and customize the latest generative AI models (image, video, music) without managing local GPU hardware.

Chatbot & Agent Developers

To seamlessly deploy and manage high-performance LLMs (e.g., Llama 3) for inference at scale.

Researchers

For creating reproducible, shareable, and easily runnable versions of their custom models for the community.

DevOps Engineers

To automate testing and publishing of new model versions with the Cog CLI and GitHub Actions.

Strengths

Simplicity and Speed

Open Source Focus

Transparent Usage

Production-Ready

Weaknesses

Model Licensing Ambiguity

Cold Boot Latency

Getting Started with Replicate: Step-by-Step Guide

You can run your first model prediction on Replicate in minutes.

Step 1: Sign Up and Get API Key

Create a Replicate account and retrieve your API token, which will be used for authentication in your code.
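Rather than hard-coding the token, a common pattern is to keep it in the REPLICATE_API_TOKEN environment variable, which Replicate's official Python client reads by default. The sketch below uses a hypothetical helper, auth_headers, that fails fast with a clear message when the variable is missing.

```python
import os


def auth_headers(env=os.environ) -> dict:
    """Hypothetical helper: build HTTP auth headers from REPLICATE_API_TOKEN."""
    token = env.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError("Set REPLICATE_API_TOKEN to the API token from your Replicate account.")
    return {"Authorization": f"Bearer {token}"}
```

Accepting the environment as a parameter keeps the helper easy to test without touching the real process environment.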

Step 2: Choose a Model

Browse the model library and select a model (e.g., a text-to-image generator). Note the model path (e.g., black-forest-labs/flux-pro).
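Model references take the form owner/name, optionally followed by :version-id to pin an exact version. The hypothetical helper below splits such a reference into its parts, which can be useful for validating user-supplied paths before calling the API.

```python
from typing import NamedTuple, Optional


class ModelRef(NamedTuple):
    owner: str
    name: str
    version: Optional[str]  # None means no version is pinned


def parse_model_ref(ref: str) -> ModelRef:
    """Hypothetical helper: split 'owner/name' or 'owner/name:version'."""
    path, _, version = ref.partition(":")
    owner, sep, name = path.partition("/")
    if not sep or not owner or not name or "/" in name:
        raise ValueError(f"expected 'owner/name[:version]', got {ref!r}")
    return ModelRef(owner, name, version or None)
```

For example, parse_model_ref("black-forest-labs/flux-pro") yields owner "black-forest-labs", name "flux-pro", and no pinned version.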

Step 3: Install the Client Library

Install the client library for your preferred language (e.g., pip install replicate for Python).

Step 4: Run the Prediction

Use the client library to call the model’s prediction API with your inputs. This requires only a few lines of code:

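```python
import replicate  # reads the API token from the REPLICATE_API_TOKEN environment variable

# Model path from Step 2; append ":<version-id>" to pin a specific version
output = replicate.run("black-forest-labs/flux-pro", input={"prompt": "Your idea"})
print(output)
```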

Step 5: Integrate and Monitor

Integrate the model call into your application. For long jobs, use webhooks to receive the result when the prediction is complete.
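On the receiving side, each webhook delivery carries the prediction object as JSON. The framework-independent sketch below uses a hypothetical handler and assumes the payload includes the id, status, output, and error fields from Replicate's prediction API, with succeeded, failed, and canceled as terminal statuses. It does not verify that the request really came from Replicate; a production endpoint should add that check.

```python
import json
from typing import Any, Optional

# Statuses after which a prediction will not change again (assumed).
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def handle_prediction_webhook(raw_body: bytes) -> Optional[Any]:
    """Hypothetical handler for one webhook delivery.

    Returns the output once the prediction has succeeded, returns None while it
    is still running, and raises if it failed or was canceled.
    """
    prediction = json.loads(raw_body)
    status = prediction.get("status")
    if status not in TERMINAL_STATUSES:
        return None  # e.g. "starting" or "processing": wait for a later delivery
    if status == "succeeded":
        return prediction.get("output")
    raise RuntimeError(f"prediction {prediction.get('id')} {status}: {prediction.get('error')}")
```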

Frequently Asked Questions

Q: What is Cog?

A: Cog is Replicate’s open-source tool for packaging machine learning models and their dependencies into standard, production-ready Docker containers, making them universally deployable.

Q: How can I avoid "cold boot" delays?

A: Cold boots occur when no instance of a model is running, so Replicate must start a GPU worker and load the model before it can serve the request. For real-time applications, you can use Replicate's Official Models (which are kept "warm" and ready) or configure workers to remain active for longer (at an increased cost).
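To check whether cold boots actually affect an application, one approach is to compare timestamps on finished predictions. The sketch below assumes the prediction object exposes ISO 8601 created_at and started_at fields, as Replicate's API reference describes. The gap between them covers both queueing and booting, so it is an upper bound on cold-boot delay rather than an exact measurement. The function name is hypothetical.

```python
from datetime import datetime


def _parse_ts(value: str) -> datetime:
    # Normalize a trailing "Z" so datetime.fromisoformat works on Python < 3.11.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def startup_delay_seconds(prediction: dict) -> float:
    """Hypothetical helper: seconds between a prediction's creation and its start."""
    created = _parse_ts(prediction["created_at"])
    started = _parse_ts(prediction["started_at"])
    return (started - created).total_seconds()
```

Consistently large values for the first request after a quiet period, and small values afterwards, suggest cold boots rather than general queueing.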

Q: Can I use Replicate for long-term training runs?

A: Yes, Replicate supports model training and long-running jobs. You can initiate a training job and use a webhook to get notified when the job is complete, which saves you from keeping a persistent connection open.

Pricing

Replicate uses a simple, pay-as-you-go pricing structure based on the type of GPU used, billed down to the second.

| Hardware or Model Type | Key Use Cases |
| --- | --- |
| CPU (Small) | Quick tests, model pre-processing, simple inference. |
| NVIDIA T4 GPU | Entry-level generative AI, smaller model inference. |
| NVIDIA L40S GPU | High-performance inference, fine-tuning large models. |
| NVIDIA A100 (80GB) | Large-scale model training and distributed compute. |
| Official Models | Priced by output (e.g., $0.09 per image) rather than by time, for predictable costs. |
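Because billing is per second, the cost of a time-billed prediction is its run time multiplied by the hardware's per-second rate, while official models multiply the number of outputs by a per-output price. The sketch below makes both calculations explicit; the GPU rate in the example is a placeholder, not Replicate's actual price, and real rates should be taken from the pricing page. The function names are hypothetical.

```python
def time_billed_cost(run_seconds: float, rate_per_second: float) -> float:
    """Hypothetical helper: cost of a prediction billed by the second."""
    if run_seconds < 0 or rate_per_second < 0:
        raise ValueError("run time and rate must be non-negative")
    return run_seconds * rate_per_second


def output_billed_cost(outputs: int, price_per_output: float) -> float:
    """Hypothetical helper: cost of an official model priced per output (e.g. per image)."""
    return outputs * price_per_output


if __name__ == "__main__":
    gpu_rate = 0.001  # placeholder $/second, for illustration only
    print(f"12 s on a GPU: ${time_billed_cost(12, gpu_rate):.4f}")
    print(f"4 images:      ${output_billed_cost(4, 0.09):.2f}")  # $0.09/image example from the table
```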

Alternatives

Hugging Face Inference Endpoints

Provides a similar managed service for deploying models from the Hugging Face Hub to dedicated endpoints, with a strong focus on LLMs and transparency.

Google Cloud Vertex AI

A full MLOps platform offering robust model deployment, but typically involves more complex setup and higher costs for basic API inference.

RunPod/CoreWeave

GPU cloud providers that rent raw GPU instances (Pods) by the hour, giving the user full infrastructure control, whereas Replicate provides a simplified API wrapper.
