RunPod is a developer-centric cloud GPU provider that has emerged as a powerhouse for AI, machine learning, and generative AI workloads.

Introduction

Training and running modern AI models, especially large language models (LLMs) and diffusion models, require immense computational power from Graphics Processing Units (GPUs). Acquiring and maintaining this hardware is often prohibitively expensive. RunPod solves this by providing on-demand, scalable GPU instances (Pods) at highly competitive rates, billed by the second.

RunPod’s platform is designed by developers for developers. It eliminates infrastructure friction through Docker-based containers and pre-configured environments, allowing users to launch a powerful GPU instance in under a minute. By focusing on cost efficiency, a wide selection of GPUs, and a transparent pricing structure, RunPod has positioned itself as the go-to platform for AI engineers, researchers, and hobbyists looking to accelerate their deep learning projects without the typical cloud complexity or hidden costs.

GPU Cloud

AI/ML Infrastructure

Per-Second Billing

Serverless

Review

Founded in 2022 by Zhen Lu and Pardeep Singh, RunPod aims to democratize access to high-performance GPU computing by offering a more cost-effective and flexible alternative to hyperscalers like AWS or Azure.

RunPod’s main value lies in its transparent pricing model: per-second billing and zero data egress fees make it cost-efficient for both short experiments and large-scale data transfers. It offers a wide range of NVIDIA GPUs (A100, H100, RTX series) through its Secure Cloud (for enterprises) and Community Cloud (for budget-conscious R&D). The platform’s flexibility and depth of configuration can overwhelm complete beginners, but its pre-configured templates and containerized environments make it quick to deploy even complex workloads such as ComfyUI pipelines and LLMs.

Features

Per-Second Billing

Charges compute time down to the second, optimizing costs for short tests, batch jobs, and rapid experimentation.
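To make the effect of per-second billing concrete, here is a minimal sketch comparing it with a scheme that bills every started hour in full. The hourly rate is a hypothetical figure chosen for easy arithmetic, not a quoted RunPod price, and the function names are illustrative.

```python
import math


def per_second_cost(hourly_rate_usd: float, seconds: int) -> float:
    """Cost when billed for the exact number of seconds used."""
    return hourly_rate_usd / 3600 * seconds


def hourly_rounded_cost(hourly_rate_usd: float, seconds: int) -> float:
    """Cost when every started hour is billed in full."""
    return hourly_rate_usd * math.ceil(seconds / 3600)


# Hypothetical rate for illustration only (not a real price).
RATE_USD_PER_HOUR = 2.00
run_seconds = 7 * 60 + 30  # a 7.5-minute experiment

print(f"per-second:     ${per_second_cost(RATE_USD_PER_HOUR, run_seconds):.2f}")
print(f"hourly-rounded: ${hourly_rounded_cost(RATE_USD_PER_HOUR, run_seconds):.2f}")
```

Under these assumed numbers the short run costs a fraction of a full billed hour, which is why the difference matters most for brief tests and frequent restarts.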

Zero Data Egress Fees

Eliminates costly data transfer fees, which are often a major, unpredictable expense when dealing with large training datasets on other cloud platforms.

Secure & Community Cloud

Offers two distinct environments: the highly reliable, enterprise-grade Secure Cloud and the lower-cost, peer-to-peer Community Cloud for budget flexibility.

Instant GPU Pods

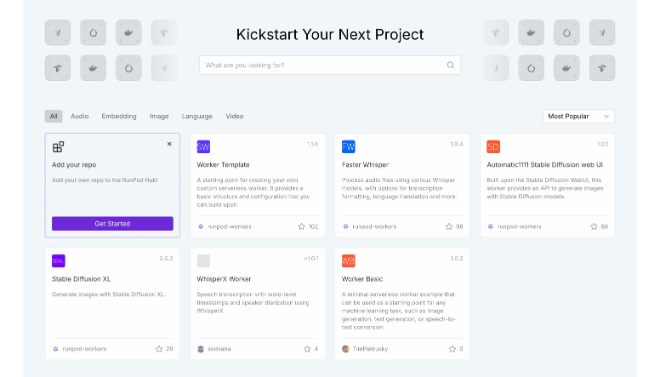

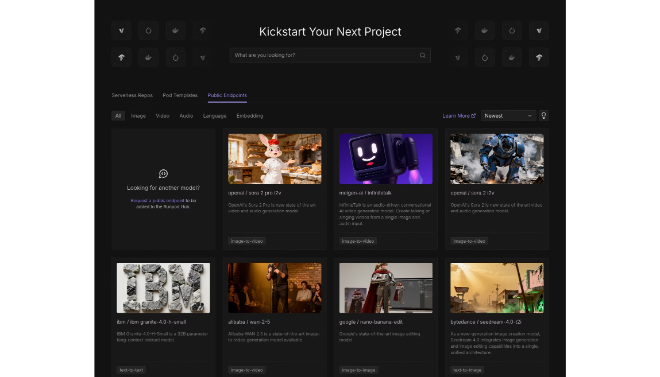

Allows users to deploy fully configured, containerized GPU instances from pre-built templates (e.g., PyTorch, ComfyUI, LLM Web UIs) in seconds.

Wide GPU Variety

Provides access to a broad range of GPUs, from cost-effective consumer cards (RTX 4090) to top-tier data center accelerators (NVIDIA A100, H100, H200).

Serverless & Spot Options

Features Serverless compute for bursty, auto-scaling inference workloads, and Spot Instances for significant discounts on non-critical, fault-tolerant jobs.
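Serverless inference workers are usually built around a handler function that receives one job and returns a result. The sketch below assumes the job arrives as a dictionary with an "input" key, which is how I understand RunPod's Python SDK to pass jobs; treat that shape as an assumption. Registering the handler with the SDK is deliberately omitted so the example runs anywhere, and `fake_generate` is a hypothetical stand-in for a real model call.

```python
from typing import Any, Dict


def fake_generate(prompt: str, max_tokens: int) -> str:
    """Hypothetical stand-in for a model: echoes the first max_tokens words."""
    return " ".join(prompt.split()[:max_tokens])


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Validate the job payload and return a JSON-serializable result."""
    payload = job.get("input") or {}
    prompt = payload.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return {"error": "input.prompt must be a non-empty string"}
    max_tokens = int(payload.get("max_tokens", 16))
    return {"output": fake_generate(prompt, max_tokens)}


if __name__ == "__main__":
    # Local smoke test with a hand-written job payload.
    job = {"id": "local-test", "input": {"prompt": "hello from a pod", "max_tokens": 2}}
    print(handler(job))
```

Keeping the handler a plain function with validated input makes it easy to test locally before it is wired into an auto-scaling endpoint.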

Best Suited for

AI/ML Engineers & Researchers

To train, fine-tune, and iterate on deep learning models (LLMs, Diffusion) cost-effectively and without infrastructure management overhead.

Generative AI Developers

Ideal for running and customizing complex pipelines like Stable Diffusion and ComfyUI using high-VRAM, affordable GPUs.

Cost-Sensitive Startups

Provides high-performance compute at prices significantly lower than hyperscalers, preserving runway for high-cost training.

Students & Hobbyists

The Community Cloud offers an accessible, low-cost entry point for learning and experimenting with large AI models.

LLM Deployment Teams

The Serverless feature is perfect for deploying LLM inference endpoints that scale instantly with real-time traffic demand.

Data Scientists

For executing large-scale data processing or batch jobs where per-second billing ensures maximum cost efficiency.

Strengths

Unbeatable Price/Performance

Developer Control

Ease of Deployment

Hardware Availability

Weaknesses

Configuration Complexity

Community Cloud Reliability

Getting Started with RunPod: Step by Step Guide

Getting started with RunPod involves launching a pre-configured GPU Pod.

Step 1: Sign Up and Add Credit

Create a RunPod account and add credit to your balance, as the platform operates on a pay-as-you-go model.

Step 2: Choose Your Compute Type

Select “Secure Cloud” for critical jobs or “Community Cloud” for the lowest rates.

Step 3: Select a GPU and Template

Choose your desired GPU (e.g., A100 (80GB) for training or RTX 4090 for image generation). Select a pre-configured template like “RunPod PyTorch” or “ComfyUI.”

Step 4: Configure Storage and Launch

Configure a Network Volume (persistent storage) to save your data and models. Click “Deploy” to launch your Pod instance, which typically starts in seconds.

Step 5: Connect to the Pod

Access your environment through JupyterLab (a browser-based notebook, terminal, and file editor) or connect via SSH to begin running your AI workloads.
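Once connected, a quick sanity check is to confirm that the GPU you selected is actually visible inside the Pod. The sketch below shells out to `nvidia-smi`, the standard NVIDIA driver utility, and returns an empty list if the tool is missing or fails, so it also runs harmlessly on a machine without a GPU. The function name `list_gpus` is illustrative.

```python
import shutil
import subprocess
from typing import List


def list_gpus() -> List[str]:
    """Return one 'name, total memory' line per visible GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools not installed or not on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_gpus()
print(gpus if gpus else "No NVIDIA GPU visible from this environment")
```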

Frequently Asked Questions

Q: What is the difference between Secure Cloud and Community Cloud?

A: Secure Cloud runs in enterprise-grade data centers and offers higher reliability and compliance at a higher price. Community Cloud runs on vetted, crowdsourced GPU hosts and offers significantly lower prices with more variable reliability.

Q: Are there data egress (data download) fees?

A: No. RunPod charges no fees for data ingress or egress, which can yield substantial savings when moving large datasets.

Q: Can I stop my Pod and resume work later?

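A: Yes. By attaching a persistent Network Volume (storage) to your Pod, you can stop the Pod (which stops compute billing) and relaunch it later with the files on the volume intact. Running processes and in-memory state are not preserved, so save checkpoints to the volume if you want to resume where you left off.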
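Resuming after a stop works best when the job writes its progress to persistent storage as it goes. Here is a minimal sketch of that pattern using a JSON checkpoint; the file name and the `{"step": ...}` state layout are hypothetical, and a temporary directory stands in for the volume's mount path so the example runs anywhere.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(directory: Path, state: dict) -> Path:
    """Write state via a temp file and rename, so an interrupted write
    cannot leave a half-written checkpoint behind."""
    directory.mkdir(parents=True, exist_ok=True)
    target = directory / "checkpoint.json"
    fd, tmp_name = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(state, tmp)
    os.replace(tmp_name, target)  # rename within the same directory
    return target


def load_checkpoint(directory: Path) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists."""
    target = directory / "checkpoint.json"
    if not target.exists():
        return {"step": 0}
    return json.loads(target.read_text())


# Assumption: in a real Pod, point this at wherever your volume is mounted.
volume_dir = Path(tempfile.mkdtemp())

state = load_checkpoint(volume_dir)
for step in range(state["step"], state["step"] + 3):
    state = {"step": step + 1}  # stand-in for one unit of real work
    save_checkpoint(volume_dir, state)

print(load_checkpoint(volume_dir))
```

Running the loop again against the same directory continues from the last saved step instead of starting over.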

Pricing

RunPod uses a transparent, usage-based model with different rates for its core services.

| Service | Billing | Notes |
| --- | --- | --- |
| Cloud GPU Pods | Per-Second Billing | Non-interruptible, highly reliable compute. |
| Community Pods | Per-Second Billing | Highly discounted, variable reliability. |
| Serverless | Per-Second Billing | Ideal for auto-scaling inference workloads. |
| Persistent Storage | Per-GB/Month | Network Volume (data is saved even when the Pod is stopped). |
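Because compute is metered per second while storage is metered per GB per month, a monthly bill has two independent parts. The sketch below adds them up; every rate and quantity is a hypothetical placeholder rather than a published RunPod price, and `monthly_estimate` is an illustrative name.

```python
from typing import Dict


def monthly_estimate(
    gpu_hourly_usd: float,
    gpu_seconds: int,
    storage_gb: float,
    storage_usd_per_gb_month: float,
) -> Dict[str, float]:
    """Split a monthly estimate into compute (per second) and storage (per GB-month)."""
    compute = gpu_hourly_usd / 3600 * gpu_seconds
    storage = storage_gb * storage_usd_per_gb_month
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "total": round(compute + storage, 2),
    }


# Hypothetical inputs: 40 GPU-hours of use and a 100 GB volume kept all month.
estimate = monthly_estimate(
    gpu_hourly_usd=0.50,
    gpu_seconds=40 * 3600,
    storage_gb=100,
    storage_usd_per_gb_month=0.10,
)
print(estimate)
```

The storage term does not depend on how long the Pod runs, so a large volume left attached to a rarely used Pod can account for a meaningful share of the total.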

Alternatives

CoreWeave

A major "neocloud" provider focused on high-scale GPU compute for enterprises, known for low-latency provisioning and strong NVIDIA backing.

Vast.ai

A decentralized marketplace for renting community GPUs, offering a much wider, often cheaper, variety of consumer GPUs but with varying reliability.

Lambda Labs

A pure-play GPU cloud provider known for its simple pricing, focus on deep learning, and one-click GPU cluster deployments.
