Qwen 2.5 Max is Alibaba's flagship Mixture-of-Experts (MoE) model, designed to compete with industry-leading systems such as GPT‑4o and Claude 3.5.

Introduction

Qwen 2.5 Max is meant to balance performance and efficiency. Built on an MoE architecture, it activates only the specialist “experts” relevant to each input, trading off computational load against capability. The model achieves its benchmark results without showing an explicit reasoning trace, and its answers are intuitive and well aligned.

Available through Qwen Chat or as an API with OpenAI-compatible endpoints, Qwen 2.5 Max is intended to be simple to use and integrate. Users can try it for free on the web, then scale to heavier usage through Alibaba Cloud’s pay-as-you-go infrastructure.

Freemium Model

API‑Ready

Efficient Scaling

Multilingual

High‑Capacity Context

Review

Qwen 2.5 Max is Alibaba's flagship Mixture-of-Experts (MoE) model, designed to compete with industry-leading systems such as GPT‑4o and Claude 3.5. Pretrained on more than 20 trillion tokens and then tuned with supervised fine-tuning (SFT) and RLHF, it performs at or near the top on benchmarks covering general knowledge, coding, mathematics, and human preference, frequently beating its competitors. It has a 128K‑token context window and supports 29+ languages. Although its weights are not open, anyone can use it through Qwen Chat and the Alibaba Cloud API. Its performance and efficiency make it a solid choice for developers, enterprises, and creative teams.

Features

MoE Architecture

Activates relevant expert modules dynamically for each task, improving scalability and efficiency.
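To make the idea of dynamic expert activation concrete, here is a toy sketch of top-k routing, a common MoE technique. It is not Qwen 2.5 Max's actual router, whose details this review does not cover; the function names, the gate scores, and the four stand-in experts are all hypothetical.

```python
import math


def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_scores[i] for i in chosen)  # subtract for numerical stability
    exps = {i: math.exp(gate_scores[i] - peak) for i in chosen}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in chosen]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    return sum(weight * experts[i](x)
               for i, weight in top_k_route(gate_scores, k))


# Four stand-in "experts"; a real model would use neural sub-networks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x * x]
output = moe_forward(3.0, experts, gate_scores=[0.1, 2.0, -1.0, 2.0], k=2)
```

Only two of the four experts run for this input, which is where the compute saving comes from: the total parameter count can grow while the work per input stays roughly fixed.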

Massive Training Corpus

Pretrained on 20+ trillion tokens with further tuning via SFT and RLHF.

128K‑Token Context

Handles entire documents, codebases, or datasets in a single session.
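Before sending a large document, it can help to check roughly whether it fits. The sketch below assumes that “128K” means 128,000 tokens and that one token is about four characters; both are rough assumptions, and the real tokenizer may count differently. The reply reserve is likewise an illustrative value.

```python
import math

CONTEXT_LIMIT = 128_000   # assumption: "128K" read as 128,000 tokens
CHARS_PER_TOKEN = 4       # assumption: crude average, varies by language


def estimate_tokens(text):
    """Rough token estimate from character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents, reserved_for_reply=4_000):
    """True if all documents plus a reply budget fit within the context limit."""
    used = sum(estimate_tokens(doc) for doc in documents)
    return used + reserved_for_reply <= CONTEXT_LIMIT
```

If `fits_in_context` returns False, the input needs to be split or summarized before it is sent.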

Benchmarked Excellence

Scores 89.4 on Arena‑Hard, 62.2 on LiveBench, and 76.1 on MMLU‑Pro, ahead of or neck and neck with top competitors.

Multilingual Support

Supports 29+ languages.

OpenAI‑API Compatible

Seamless integration for apps already taking advantage of GPT‑style APIs.

Best Suited for

Enterprise Developers

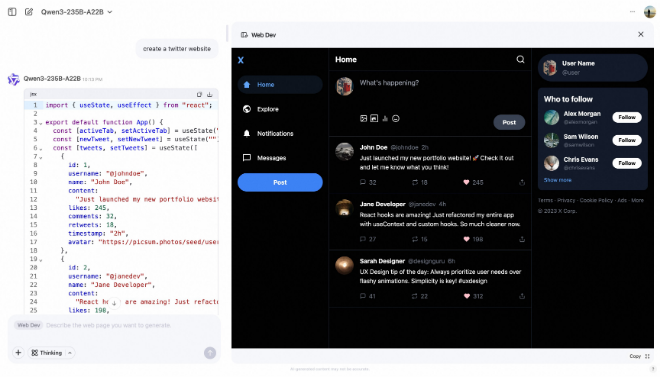

Build multilingual chatbots, assistants, or automation tools via API.

Content Teams & Marketers

Generate reports, blogs, or ad copy at scale.

Coders & Analysts

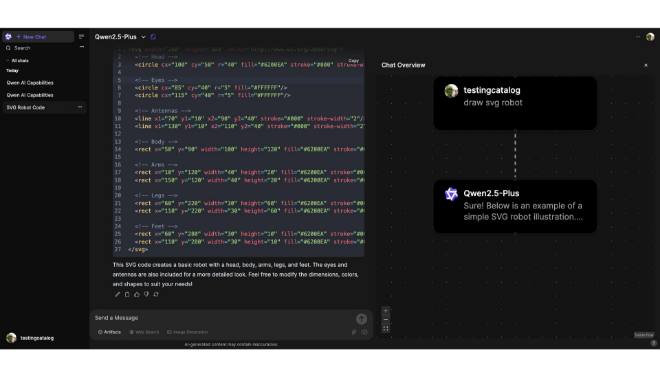

Handle code generation, debugging, and data analysis with contextual awareness.

Researchers & Academics

Process long documents and large corpora without truncation.

Multilingual Services

Ideal for translation, localization, and worldwide customer support.

Strengths

Leads or is near the lead in major AI benchmarks.

MoE strategy cuts compute cost while improving scale.

The 128K‑token context window enables deep, coherent interaction.

Integrates smoothly with existing OpenAI‑based workflows.

Weaknesses

Not open-sourced; it can’t be run locally or fine-tuned independently.

Does not output a step-by-step chain of thought.

Getting started with Qwen 2.5 Max: step-by-step guide

Getting started with Qwen is easy:

Step 1: Visit Qwen Chat

Open Qwen Chat in your browser and choose “Qwen 2.5 Max” from the model dropdown. Chatting is free, so you can start exploring right away.

Step 2: Register Alibaba Cloud & Enable Model Studio

Sign up for an Alibaba Cloud account, enable Model Studio, and create your API key.

Step 3: Integrate via OpenAI-Compatible API

Point your existing OpenAI-compatible code at the endpoint Alibaba Cloud provides, authenticate with your API key, and set the model name (e.g., “qwen-max-2025-01-25”).
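As a minimal sketch of this step, the code below builds an OpenAI-style chat completion request with only the Python standard library. The base URL and the `DASHSCOPE_API_KEY` environment variable name are assumptions not stated in this review, so confirm both in the Model Studio console; `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import os
import urllib.request

# Assumptions to verify in the Model Studio console: this base URL and the
# DASHSCOPE_API_KEY variable name are not given in this review.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"  # model name quoted in Step 3


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, os.environ["DASHSCOPE_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a key exported in the environment, `ask("Say hello in three languages.")` would return the reply text. Code that already uses the `openai` Python package should only need the same base URL and key passed to its client constructor.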

Step 4: Adjust Parameters

Tweak temperature, max_tokens, and other parameters to adjust output quality and style.
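One way to keep these settings tidy is a small helper that adds them to a request payload. This is a sketch: `with_sampling` is a hypothetical name, the defaults are illustrative, and the 0 to 2 temperature range follows the OpenAI chat API convention, which the service may narrow.

```python
def with_sampling(payload, temperature=0.7, max_tokens=512, top_p=None):
    """Return a copy of an OpenAI-style chat payload with sampling options set."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature is expected to be between 0 and 2")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    tuned = dict(payload, temperature=temperature, max_tokens=max_tokens)
    if top_p is not None:
        tuned["top_p"] = top_p  # optional nucleus-sampling cutoff
    return tuned


base = {
    "model": "qwen-max-2025-01-25",
    "messages": [{"role": "user", "content": "Summarize this report."}],
}
precise = with_sampling(base, temperature=0.2, max_tokens=256)   # focused answers
creative = with_sampling(base, temperature=1.0, max_tokens=1024)  # more varied copy
```

Lower temperatures tend to give more repeatable output, which suits code and analysis; higher values give more variety, which suits drafting copy.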

Step 5: Track Usage & Scale

Track usage and spend in the Alibaba Cloud console. Scale up when your workloads are ready for production.
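Alongside the console, token counts can be tallied in your own code. The sketch below assumes each response carries the `usage` object that OpenAI-style chat completion responses normally include (`prompt_tokens`, `completion_tokens`); `UsageTally` is a hypothetical helper, not part of any SDK.

```python
from dataclasses import dataclass


@dataclass
class UsageTally:
    """Running totals of tokens consumed across API responses."""
    requests: int = 0
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, response):
        """Add one parsed JSON response (a dict) to the totals."""
        usage = response.get("usage") or {}  # tolerate a missing usage field
        self.requests += 1
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        self.completion_tokens += usage.get("completion_tokens", 0)

    @property
    def total_tokens(self):
        return self.prompt_tokens + self.completion_tokens
```

Calling `tally.record(body)` after each request gives a local count to compare against the figures in the console.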

Frequently Asked Questions

Q: Is it open-source?

A: No, Qwen 2.5 Max is proprietary. Alibaba plans to release open weights but hasn’t published them yet.

Q: Can I fine-tune it?

A: No. The model is available only through the API, so local fine-tuning and weight adjustment are not possible.

Q: How much context can it process?

A: Up to 128K tokens per session.

Pricing

Qwen 2.5 Max is available through a pay-as-you-go API on Alibaba Cloud. Users report that it costs about one-tenth as much as GPT‑4 for equivalent usage.
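Pay-as-you-go billing is typically priced per million tokens, with separate input and output rates, so a bill can be estimated with simple arithmetic. In the sketch below the function name is hypothetical and every price is a made-up placeholder, not a published rate; substitute the current figures from each provider's price list.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimated spend, in the currency the prices are quoted in."""
    return (prompt_tokens * input_price_per_million
            + completion_tokens * output_price_per_million) / 1_000_000


# Placeholder prices for illustration only; not real rates for any model.
monthly = estimate_cost(500_000, 100_000,
                        input_price_per_million=2.0,
                        output_price_per_million=6.0)
```

Running the same token counts through two providers' price lists gives a like-for-like comparison for your own workload.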

Alternatives

GPT‑4o

Proprietary and closed-weight; highly capable but pricier.

Claude 3.5 Sonnet

A capable generalist with strong chain‑of‑thought reasoning; closed‑source.

DeepSeek V3

Open‑weight MoE model with similar benchmark results.
